Mixed Reality Conference (MIXR) 2021: How Augmented Reality Software Changes the Business Environment

Kirill Sharshakov, our Business Development Team Lead, took part in MIXR, one of the largest conferences for AR/VR professionals. Read this article to find out how the mixed reality market has changed over the past 18 months, how augmented reality software can help businesses cope with the pandemic and its consequences, and which new technologies are poised to disrupt the industry!

About MIXR 2021

MIXR is an international conference for people working in augmented, virtual, and mixed reality. It was held in Moscow and gathered over 500 participants and 70 speakers, including representatives of Microsoft, Epic Games, Unity, Huawei, and Banuba.

The presenters and guests were divided into five tracks according to their interests:

- Business. Success stories, case studies, and best practices for entering new markets and domains (eCommerce, manufacturing, etc.).

- Technologies. New approaches to solving problems and fresh takes on existing ones.

- Creative. Innovative ways to create augmented reality content and consume it.

- Gamedev. All kinds of immersive games, from mobile AR to room-scale VR.

- Education. The influence of immersive technologies on the learning process.

“The event is already unique because you don’t have to explain to people what augmented reality software, virtual reality, and other immersive technologies are. The ‘wow effect’ from VR headsets and funny pictures on smartphones has passed, so most of the presentations had a very simple goal: to tell colleagues and other professionals how to make money with these technologies. This seems like a simple goal, but it helps the industry a lot,” said Andrei Ivashentsev, the organizer of the conference.

While all the presentations were interesting and valuable, we couldn’t cover them all in a single article, so we picked four of the most important ones to go over.

Quick and scalable creation of virtual humans

Mark Flanagan, Education Partner Manager (EMEA) at Epic Games, presented MetaHuman Creator, a cloud-based technology that lets users create lifelike digital humans for use in Unreal Engine (UE) projects.

Creating characters for games or animated movies is a complex task that requires the work of multiple specialists skilled in anatomy, animation, rigging, and many other disciplines. The resulting characters also have to be believable and avoid the “uncanny valley”. This is a challenge even for major studios, let alone smaller ones or independent creators.

Epic saw the opportunity here and acquired a couple of companies that had the technology to make character creation easier, quicker, and more scalable.

The result is a web-based application that uses AI and scans of real people to generate lifelike human models, which can then be modified and exported directly to UE or a 3D editor (e.g., Maya). On the surface, it looks like the character creation screen of a role-playing game such as Skyrim or Cyberpunk 2077: the user can tweak facial features, select hairstyles and clothing, pick a body type, and so on.

This dramatically reduces the time needed to create characters and makes AAA-quality characters accessible even to small indie studios and solo developers. Moreover, character creation through MetaHuman is scalable, which makes populating virtual worlds much easier than before.

The main target audience of MetaHuman is game developers and VFX studios. However, the technology could also be useful to anyone else who needs many virtual humans quickly: architectural companies simulating crowds, automotive businesses, and so on.

Because MetaHuman is cloud-based, it could potentially work with mobile augmented reality software as well.

And if you prefer Unity, check out our face tracking and animation tech.

Multi-user Snapchat lenses for higher engagement

Artem Petrov, Associate Creative Director, and Pavel Antonenko, Technical Program Manager at Snap, presented several new augmented reality features for Snapchat.

The first is Connected Lenses, a feature that lets multiple users share the same AR object and modify it in real time. It is a solid way to increase user engagement and draw more people to the app.

So far, Snap has partnered with Lego to let people build various construction sets together. The players can be in the same room or half a world apart, and everything works either way. The company has also provided tools for creating augmented reality filters that keep users engaged, and users have already started building their own Connected Lenses, for example, a ping-pong game.

Connected Lenses example. Source

“They [users] immediately pounce on the new features and start making cool effects,” Antonenko said.

The second highlight of the presentation was SnapML. Simply put, it lets creators build lenses around their own machine learning models, made with standard tools and frameworks, and share them with Snapchat’s entire audience. Thanks to machine learning, lenses can now include speech recognition, scan real objects, and integrate custom neural networks. One user, for example, created a lens that helps people choose the right surfboard.

Not only does this make the app more attractive to new users, it also helps people express themselves and bring value to the entire community.

Besides the technological additions, there were also two commercial ones. One is Lens Analytics, a tool that lets lens creators see demographic and engagement stats for their lenses. The other is Creator Marketplace, a way for lens makers to monetize their work.

Virtual try-on for decreased returns and higher satisfaction

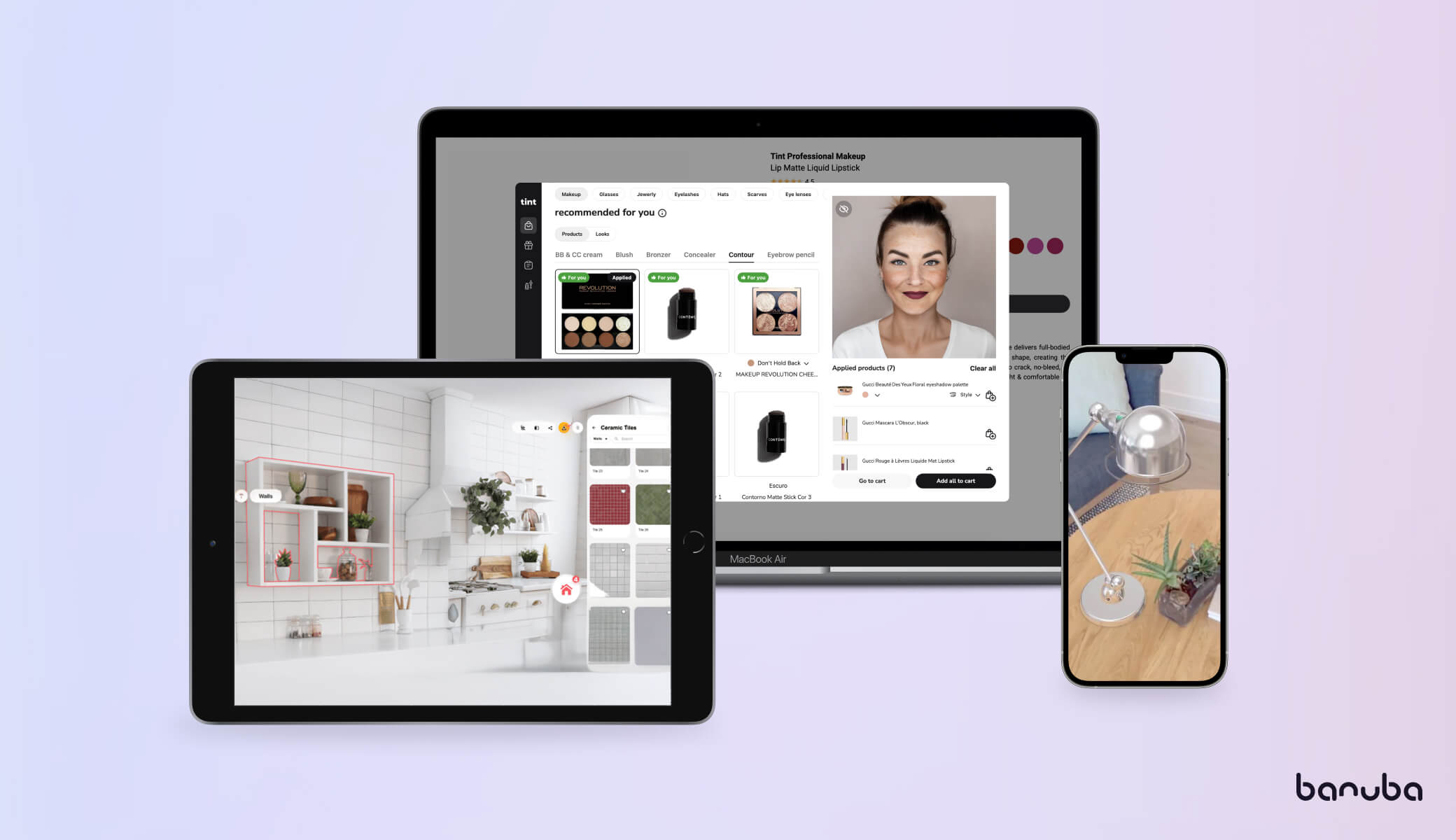

Kirill Sharshakov, our Business Development Team Lead, dedicated his presentation to virtual try-on. This technology lets users test products without physically touching them, which is especially important for makeup and doubly valuable during the pandemic.

As we specialize in Face AR (augmented reality technologies related to face recognition and tracking), the presentation focused on headwear, jewelry, glasses, and other items worn on or around the face.

The first option for virtual try-on is so-called “smart mirrors”: devices that look like ordinary mirrors but run augmented reality software. They are essentially virtual fitting rooms: by selecting different items, customers can quickly try on many products on-screen and be confident that the physical ones will look the same.

The second option is try-on built into a mobile, desktop, or web app. It lets people try new things whenever and wherever is convenient for them, without even leaving home. The user can choose the goods they like and order them right away.

Current technology can render objects realistically, including their interactions with light (glimmer and glare), physics, and more. This translates into high customer satisfaction: for example, return rates for sunglasses and shoes that were tried on virtually are under 2%.

Virtual try-on is already being used by mid-sized and large cosmetics manufacturers and retailers. Examples include Sephora, Ulta, and our clients Gucci and Looke.

If you want to learn more about the applications of Face AR in retail, feel free to take a look at our white paper on this topic.

Conclusion

Virtual and augmented reality software already makes many things possible: realistic digital humans, cooperative 3D games, lifelike virtual try-on, and effective educational games are just a few examples. And while you are here, feel free to try out our Face AR SDK, which lets your app do many of the things mentioned above.